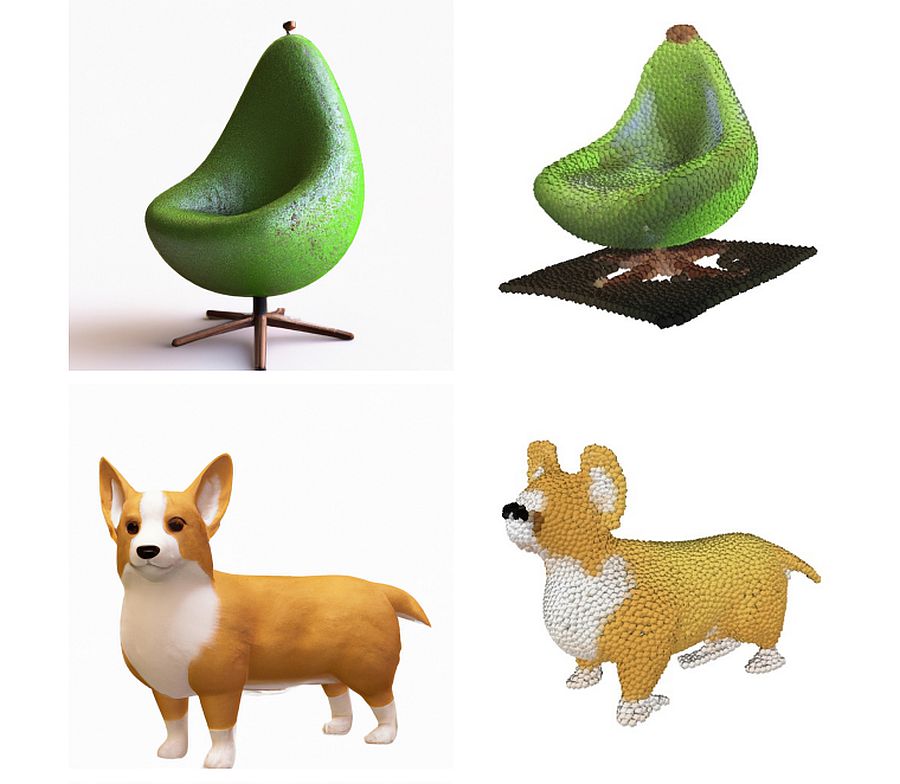

OpenAI Creates Point-E, a System for Generating 3D Point Clouds from Text

OpenAI has open-sourced Point-E, a system that transforms text descriptions into 3D models. It is an advanced artificial intelligence model from OpenAI, the company behind DALL-E, which creates images from text descriptions. Point-E goes a step further and gives the generated image a three-dimensional form.

While recent work on text-conditional 3D object generation has shown promising results, the state-of-the-art methods typically require multiple GPU-hours to produce a single sample. This is in stark contrast to state-of-the-art generative image models, which produce samples in a number of seconds or minutes.

The researchers developed an alternative method for 3D object generation that produces 3D models in only 1-2 minutes on a single GPU. See the research paper here (PDF).

The new method first generates a single synthetic view using a text-to-image diffusion model, and then produces a 3D point cloud using a second diffusion model that is conditioned on the generated image.
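The two-stage data flow can be illustrated with a toy Python sketch. Everything in it is an assumption made for illustration: `text_to_image`, `toy_denoiser`, `image_to_point_cloud` and `text_to_point_cloud` are hypothetical stand-ins, not Point-E's models or API. The "denoiser" is an oracle that ignores its noisy input, and the stage-2 loop is a generic deterministic DDIM-style sampler, not necessarily the sampler used in the paper. What the sketch does show is the structure: a prompt becomes a single image, and a second sampling loop turns Gaussian noise into a colored point cloud (x, y, z, r, g, b per point) while conditioning on that image.

```python
import math
import random

# Toy sketch of a two-stage text -> image -> point cloud pipeline.
# All functions are hypothetical stand-ins, NOT Point-E's models or API.


def text_to_image(prompt, size=8):
    """Stage 1 stand-in: return a size x size RGB 'synthetic view' for the prompt.

    A real system runs a text-to-image diffusion model here; this one fills the
    image with a single color derived deterministically from the prompt.
    """
    rng = random.Random(prompt)  # str seeds are deterministic across runs
    color = (rng.random(), rng.random(), rng.random())
    return [[color for _ in range(size)] for _ in range(size)]


def toy_denoiser(x_t, image):
    """Hypothetical image-conditioned denoiser that predicts the clean cloud x0.

    It ignores x_t and returns points on a unit sphere tinted with the image's
    mean color; a trained network would predict x0 (or the noise) from x_t and
    the image.
    """
    n = len(x_t)
    pixels = [p for row in image for p in row]
    mean_rgb = [sum(p[c] for p in pixels) / len(pixels) for c in range(3)]
    golden_angle = math.pi * (3 - math.sqrt(5))
    cloud = []
    for i in range(n):
        z = 1 - 2 * (i + 0.5) / n  # Fibonacci-sphere layout, z strictly in (-1, 1)
        radius = math.sqrt(1 - z * z)
        theta = golden_angle * i
        cloud.append([radius * math.cos(theta), radius * math.sin(theta), z, *mean_rgb])
    return cloud


def image_to_point_cloud(image, num_points=64, steps=20, seed=0):
    """Stage 2 stand-in: deterministic DDIM-style (eta = 0) sampling from noise.

    alpha_bar climbs from 0 (pure noise) to 1 (clean) over the reverse steps.
    """
    rng = random.Random(seed)
    x = [[rng.gauss(0.0, 1.0) for _ in range(6)] for _ in range(num_points)]
    alpha_bars = [(k / steps) ** 2 for k in range(steps + 1)]
    for k in range(steps):
        a_t, a_next = alpha_bars[k], alpha_bars[k + 1]
        x0 = toy_denoiser(x, image)
        # eps_hat = (x_t - sqrt(a_t) * x0) / sqrt(1 - a_t)
        # x_next  = sqrt(a_next) * x0 + sqrt(1 - a_next) * eps_hat
        x = [
            [
                math.sqrt(a_next) * c0
                + math.sqrt(1 - a_next) * (ct - math.sqrt(a_t) * c0) / math.sqrt(1 - a_t)
                for ct, c0 in zip(pt, p0)
            ]
            for pt, p0 in zip(x, x0)
        ]
    return x


def text_to_point_cloud(prompt, num_points=64):
    image = text_to_image(prompt)                   # stage 1: one synthetic view
    return image_to_point_cloud(image, num_points)  # stage 2: conditioned on that view
```

For example, `text_to_point_cloud("a red motorcycle")` returns 64 points on a unit sphere, all tinted with the same prompt-derived color. Replacing the oracle with a trained network is what would make the second stage actually reconstruct the object shown in the image.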

While the method still falls short of the state-of-the-art in terms of sample quality, it is one to two orders of magnitude faster to sample from, offering a practical trade-off for some use cases.
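An order of magnitude is a factor of ten, so "one to two orders of magnitude faster" means roughly 10x to 100x faster. The small helper below makes that arithmetic explicit by taking the base-10 logarithm of the speedup. The inputs (2 GPU-hours versus 1.2 minutes per sample) are hypothetical values picked from the ranges quoted earlier in the article, not measurements from the paper.

```python
import math


def orders_of_magnitude_faster(baseline_minutes: float, new_minutes: float) -> float:
    """Return log10(baseline / new): 1.0 means 10x faster, 2.0 means 100x faster."""
    if baseline_minutes <= 0 or new_minutes <= 0:
        raise ValueError("sampling times must be positive")
    return math.log10(baseline_minutes / new_minutes)


# Hypothetical inputs: 2 GPU-hours (120 minutes) vs. 1.2 minutes per sample
baseline, new = 120.0, 1.2
print(f"{baseline / new:.0f}x faster = "
      f"{orders_of_magnitude_faster(baseline, new):.1f} orders of magnitude")
# prints "100x faster = 2.0 orders of magnitude"
```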

The pre-trained point cloud diffusion models, along with evaluation code and models, are published here.

SOURCE: OpenAI / Cornell University